#home-server #python

What's on my Home Server 2024

Published Sep 29, 2024 by Alik Ulmasov

For the past few years, I’ve run a home server for fun. I occasionally get questions about it, so I’ve decided to jot down how my setup works.

By the way, I really recommend doing home-server-as-a-hobby – I’ve learned so much from it, and nowadays there are lots of good resources including podcasts, software lists, discords, subreddits, etc.

That said, my setup is a bit unusual. Since I like to understand each moving piece, I’ve written some of my own scripts. You can find them in this GitHub repo, and each one is designed to work independently of the others.

Hardware-wise, my server is nothing special – it’s my old 2012-era computer. It runs a headless Ubuntu Server 22.04 LTS, and draws about 29 watts from the wall during normal use.

Data

I’m really into the concept of data sovereignty, and in the past few years I’ve managed to get off of cloud services for all my data (except for email, for which I use Fastmail burners).

For backing up data, I follow the “3-2-1” approach, meaning I have 3 copies of my data: 2 local ones (hard drives in my house) and 1 remote one (Backblaze).

Two Copies Locally

The code for this is here.

It’s just two big hard drives inside the server. These drives only contain my personal data; the server’s OS is on a separate drive. One of the hard drives contains all my data, and the other contains an exact copy of it, which my script syncs at 1 AM every night.

Even though there are two drives with the same data, it’s intentionally not RAID 1, for two reasons:

- If bad data is written to the main drive, I want to give myself some time to fix the issue before the backup drive is affected (in my case, that’s until 1 AM).

- In my setup, the backup drive only gets written to once a day. The main drive gets a lot of writes, and so it gets physical wear-and-tear. Since my two drives were bought at the same time, I don’t want them to also fail at the same time.

My script has guard rails. I’m very worried about accidentally nuking all my data, and just doing rsync --delete isn’t exactly safe. Therefore my script does a dry-run of the sync before actually doing it, and won’t run at all if the difference in data between the drives is larger than 30GB.
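The guard rail described above might look roughly like the sketch below. It is not the actual script from the repo: the function names are hypothetical, and it assumes the size of the difference is read from the `Total transferred file size` line that `rsync --stats` prints. That figure counts data that would be copied, not data that would be deleted, so a real check may need more than this.

```python
import re
import subprocess

# 30 GB threshold mentioned in the post (decimal gigabytes assumed).
MAX_DIFF_BYTES = 30 * 10**9


def transferred_bytes(rsync_stats: str) -> int:
    """Extract the byte count from rsync's --stats summary."""
    match = re.search(r"Total transferred file size: ([\d,]+) bytes", rsync_stats)
    if match is None:
        raise ValueError("could not find the transferred size in rsync output")
    return int(match.group(1).replace(",", ""))


def safe_to_sync(rsync_stats: str, limit: int = MAX_DIFF_BYTES) -> bool:
    """True if the dry run reports no more than `limit` bytes to copy."""
    return transferred_bytes(rsync_stats) <= limit


def sync(src: str, dst: str) -> None:
    """Dry-run first, then do the real sync only if the change is small."""
    base = ["rsync", "-a", "--delete", "--stats"]
    dry = subprocess.run(
        base + ["--dry-run", src, dst],
        capture_output=True, text=True, check=True,
    )
    if not safe_to_sync(dry.stdout):
        raise RuntimeError("difference between drives is too large; not syncing")
    subprocess.run(base + [src, dst], check=True)
```

Calling `sync("/mnt/main/", "/mnt/backup/")` (both paths are placeholders) would then refuse to touch the backup drive whenever the dry run reports more than 30 GB of changes.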

One Copy Remotely

The code for this is here.

I back up my data to a Backblaze B2 bucket using restic. It’s encrypted, compressed, and incremental. It costs me only cents per month, and I really enjoy being able to just run restic diff <snapshot-1> <snapshot-2> to see all the files that changed between any two points in time.

I use pass to store the Backblaze credentials, and a Python script runs restic backup. The first backup took 11 hours, but each run after that is fast.
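As a rough illustration of how pass and restic can fit together, here is a minimal sketch. The pass entry names, the bucket name, and the repository path are all made up for the example; the environment variables are the ones restic reads for its B2 backend.

```python
import os
import subprocess


def pass_show(entry: str) -> str:
    """Return the first line of a `pass` entry, which holds the secret."""
    out = subprocess.run(
        ["pass", "show", entry], capture_output=True, text=True, check=True
    )
    return out.stdout.splitlines()[0]


def restic_env(get_secret=pass_show) -> dict:
    """Build the environment restic needs, without writing secrets to disk."""
    env = dict(os.environ)
    env.update({
        # Hypothetical pass entry names.
        "B2_ACCOUNT_ID": get_secret("backblaze/key-id"),
        "B2_ACCOUNT_KEY": get_secret("backblaze/key"),
        "RESTIC_PASSWORD": get_secret("restic/password"),
        # Hypothetical bucket and path inside the bucket.
        "RESTIC_REPOSITORY": "b2:my-bucket:server",
    })
    return env


def backup(path: str, get_secret=pass_show) -> None:
    """Run an incremental `restic backup` of `path`."""
    subprocess.run(["restic", "backup", path], env=restic_env(get_secret), check=True)
```

Passing the secret lookup in as a parameter keeps the credentials out of the script itself and makes the function easy to try with a fake lookup.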

Services

By my last count, my server runs 13 different services, including Syncthing, Octoprint, and Mealie.

Some are run in Docker, some are simply installed on the system.

Docker

The code for this is here.

For Docker, I have a dockers git repo, where each service has a folder with just two files: the compose yaml, and a backup.txt config file which specifies the volumes that need to be backed up. This lets me version control the configuration, and makes it easy to recreate a container if something goes wrong.

├── service1
│   ├── backup.txt
│   └── compose.yaml
├── service2
│   ├── backup.txt
│   └── compose.yml
└── service3
    ├── backup.txt
    └── compose.yml

Separately, I have a script that runs at 2 AM every night. It brings each container down, backs up all specified volumes, updates each container, brings them back up, and finally runs docker image prune -f. Outside of that, I use Dockge to monitor the containers.
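The nightly routine could be sketched as follows. This is an illustration under assumptions, not the script from the repo: it assumes backup.txt lists one path per line, that backups are plain tar archives, and that "updating" means `docker compose pull`. The function names and the archive location are hypothetical.

```python
import subprocess
from pathlib import Path


def backup_paths(service_dir: Path) -> list[str]:
    """Read backup.txt, assuming one path per line; blank lines are ignored."""
    text = (service_dir / "backup.txt").read_text()
    return [line.strip() for line in text.splitlines() if line.strip()]


def service_commands(service_dir: Path, archive_dir: Path) -> list[list[str]]:
    """Commands for one service: down, back up, update, up."""
    archive = archive_dir / f"{service_dir.name}.tar.gz"
    commands = [["docker", "compose", "down"]]
    paths = backup_paths(service_dir)
    if paths:
        commands.append(["tar", "-czf", str(archive), *paths])
    commands.append(["docker", "compose", "pull"])
    commands.append(["docker", "compose", "up", "-d"])
    return commands


def run_nightly(repo: Path, archive_dir: Path) -> None:
    """Process every service folder, then remove dangling images once."""
    services = sorted(p for p in repo.iterdir() if (p / "backup.txt").exists())
    for service_dir in services:
        for cmd in service_commands(service_dir, archive_dir):
            # Run inside the service folder so compose finds its own file.
            subprocess.run(cmd, cwd=service_dir, check=True)
    subprocess.run(["docker", "image", "prune", "-f"], check=True)
```

Stopping the container before archiving its volumes avoids copying files while the service is still writing to them.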

Subdomains and Reverse Proxy

On my network, each service is accessible by a domain like service.ulmasov.com.

I have a Cloudflare DNS entry for *.ulmasov.com that points to the server’s local network IP, and my Pi-hole also has a DNS entry that points there (in case the internet is down).

So for example, food.ulmasov.com points to the server, and then the server routes the request to Mealie using an Nginx reverse proxy.

The code for this is here.

I generate the nginx server blocks myself. I have a file that maps each subdomain to its appropriate port:

service1.ulmasov.com | 5000

service2.ulmasov.com | 5001

...

My script generates the appropriate nginx server blocks for each service. I’ve noticed that some services (like Dockge and Octoprint) need specialized server blocks, so the script handles that.
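A generator for the common case might look like the sketch below. The template is an assumption (plain HTTP on port 80, services listening on 127.0.0.1), and it leaves out the specialized blocks that services like Dockge and Octoprint need; the function names are hypothetical.

```python
# Minimal reverse-proxy server block; doubled braces are literal braces.
SERVER_BLOCK = """\
server {{
    listen 80;
    server_name {host};

    location / {{
        proxy_pass http://127.0.0.1:{port};
        proxy_set_header Host $host;
    }}
}}
"""


def parse_mapping(text: str) -> dict[str, int]:
    """Parse 'subdomain | port' lines, skipping anything without a '|'."""
    mapping = {}
    for line in text.splitlines():
        if "|" not in line:
            continue
        host, port = (part.strip() for part in line.split("|", 1))
        mapping[host] = int(port)
    return mapping


def render(mapping: dict[str, int]) -> str:
    """Render one server block per subdomain."""
    return "\n".join(
        SERVER_BLOCK.format(host=host, port=port) for host, port in mapping.items()
    )
```

For instance, `render(parse_mapping("service1.ulmasov.com | 5000"))` produces a single server block that proxies service1.ulmasov.com to port 5000.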

Network

I don’t have any ports exposed to the internet, because I’m scared of doing that.

Thankfully, though, I have Tailscale, which allows me and others to use my self-hosted services from outside of my network.

My server is connected to the Tailscale network, and advertises its own local IP as a subnet router. That allows the service.ulmasov.com domains to still work through the Tailscale network.
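In Tailscale, a subnet router advertises IP routes. If the server only advertises its own LAN address, that route is a single /32. The sketch below builds the corresponding command; the address 192.168.1.10 and the function name are placeholders, and the exact invocation used on this server is an assumption.

```python
import ipaddress


def advertise_command(lan_ip: str) -> list[str]:
    """Build the `tailscale up` command that advertises one host as a route."""
    # ip_network validates the address and formats it as CIDR.
    route = ipaddress.ip_network(f"{lan_ip}/32")
    return ["tailscale", "up", f"--advertise-routes={route}"]


# Placeholder LAN address for illustration.
print(" ".join(advertise_command("192.168.1.10")))
```

Advertised routes also have to be approved in the Tailscale admin console before other devices can use them.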

I also have an Odroid-C4 running Pi-hole. The Pi-hole is also connected to the Tailscale network, and all of the Tailscale network’s DNS requests go through the Pi-hole.

Other

Logging

The code for this is here.

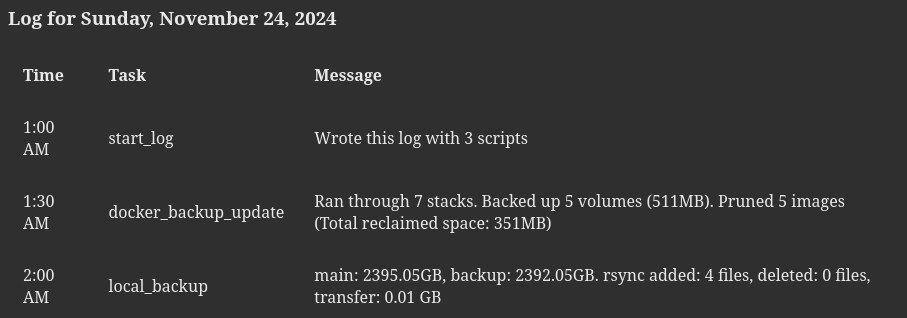

Whenever one of my scripts runs, it updates a row in a markdown file. At the end of each night, that markdown file gets turned into an HTML file which shows the last 10 days of logs, and is hosted by the server, and looks like this:

I have this page in my home page when I open my browser, and error messages get flagged in big red letters to alert me if something has gone wrong.
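The markdown-to-HTML step could be sketched like this. The log format is an assumption: a markdown table with date, script, and status columns, where a status starting with "error" marks a failure. All function names are hypothetical.

```python
from datetime import date, timedelta
from html import escape


def parse_rows(markdown: str) -> list[tuple[str, str, str]]:
    """Pull (date, script, status) rows out of a markdown table."""
    rows = []
    for line in markdown.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        # Keep rows that start with a date; skips the header and separator.
        if len(cells) == 3 and cells[0][:1].isdigit():
            rows.append(tuple(cells))
    return rows


def recent_rows(rows, today: date, days: int = 10):
    """Keep only rows from the last `days` days, counting today."""
    cutoff = today - timedelta(days=days)
    return [r for r in rows if date.fromisoformat(r[0]) > cutoff]


def render_html(rows) -> str:
    """Render rows as an HTML table, with errors in big red letters."""
    body = []
    for day, script, status in rows:
        is_error = status.lower().startswith("error")
        style = ' style="color:red;font-size:2em"' if is_error else ""
        body.append(
            f"<tr{style}><td>{escape(day)}</td>"
            f"<td>{escape(script)}</td><td>{escape(status)}</td></tr>"
        )
    return "<table>\n" + "\n".join(body) + "\n</table>"
```

Keeping the log itself in markdown means it stays readable in a terminal, while the HTML page is regenerated from it each night.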

3D Printer

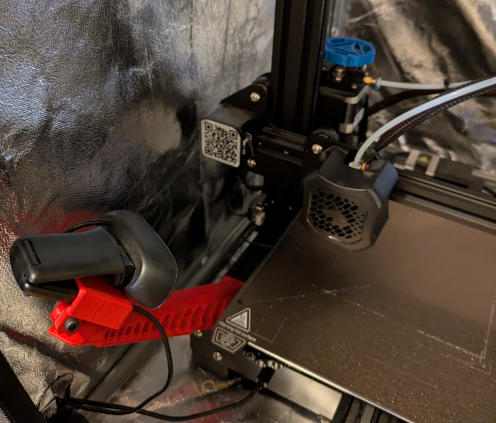

In the picture above, you can see that the server is sitting next to a big fireproof bag which has an Ender 3 V2 in it. The server runs Octoprint, which allows me to easily interact with the printer, including starting and stopping prints.

I also have a webcam which is plugged into the server, and attached to the 3D printer using this 3D printed arm. This allows me to watch the prints, including while I’m away from home (via Tailscale), and I can remotely stop them, if necessary.

Git

While I use GitHub for most things, I have a few Git repos I want to keep private. I just set those up on my server as bare Git repos (using git init --bare). I can then interact with them from all devices on my network (or through Tailscale) by pointing to the bare repo as a remote.

© 2024 Alik Ulmasov